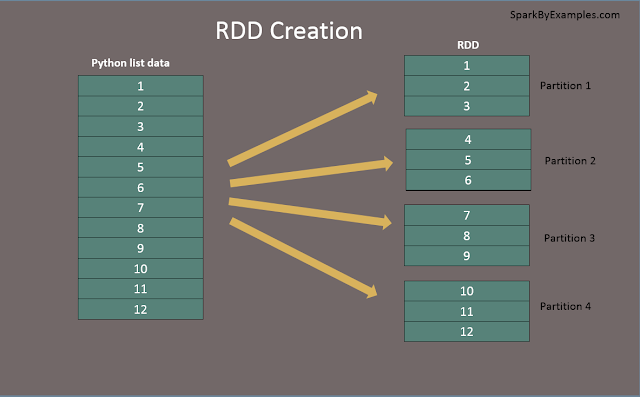

RDD create in 2 ways

parallelize

- ready from file

- parallelize

parallelize

par_rdd=sc.parallelize([1,2,3,4,5,6,7,8])

or

data = [1,2,3,4,5,6,7,8,9,10,11,12]

rdd=spark.sparkContext.parallelize(data)

type(par_rdd)

pyspark.rdd.RDD

par_rdd.collect()

[1, 2, 3, 4, 5, 6, 7, 8]

par_rdd.glom().collect()

[[1, 2, 3, 4, 5, 6, 7, 8]]

Note: By default, it will take 8 Partations

RDD Creation with Partition

#Set no particular partitions manually ----->> 3 Partitions

par_rdd=sc.parallelize([1,2,3,4,5,6,7,8],3)

par_rdd.glom().collect()

[[1, 2], [3, 4], [5, 6, 7, 8]]

[[1, 2], [3, 4], [5, 6, 7, 8]]

#Create empty RDD with partition

rdd2 = spark.sparkContext.parallelize([],10) ---->> #This creates 10 partitions

#creates empty RDD with no Partition

rdd=spark.sparkContext.emptyRDD

#Get no of partations

rdd3=sc.parallelize(range(1,20),5)

rdd3.collect()

[1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19]

rdd3.glom().collect()[[1, 2, 3], [4, 5, 6, 7], [8, 9, 10, 11], [12, 13, 14, 15], [16, 17, 18, 19]]

print("initial partition count:"+str(rdd3.getNumPartitions()))initial partition count:5Repartition and Coalesce

reparRdd = rdd3.repartition(2)print("initial partition count:"+str(reparRdd.getNumPartitions()))initial partition count:2reparRdd.glom().collect()[[1, 2, 3, 8, 9, 10, 11, 12, 13, 14, 15], [4, 5, 6, 7, 16, 17, 18, 19]]=======================================pyspark.rdd.PipelinedRDD[0, 1, 2, 3, 4, 5, 6, 7, 8, 9]#for particular range rdd3=sc.range(1,15)rdd3.glom().collect()[[1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14]]14================================================================================