Spark stores data in 2 main objects

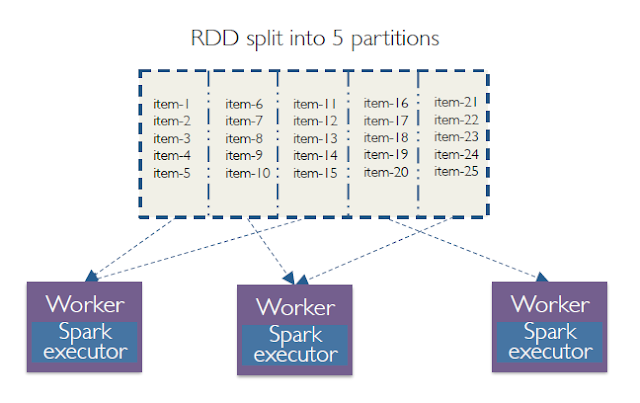

1. RDD (Resilient Distributed Dataset) -->> unstructured data store -->> immutable

2. DataFrame -->> structured data store (schema, tables)

Order of introduction: first RDD -->> then DataFrame -->> then Dataset

- RDD is used in -->> PySpark, Scala, Java (SparkR does not expose a public RDD API)

- DataFrame is used in -->> R, PySpark, Scala, Java

- Dataset is used in -->> Scala / Java

Spark Context:

SparkContext is the main entry point for Spark's core functionality. A SparkContext represents the connection to a Spark cluster and is used to create RDDs, accumulators, and broadcast variables on that cluster.

Available as -->> "sc" (lowercase) in the interactive shells (spark-shell, pyspark)